My Nextcloud Dev Setup

Submitted by blizzz

(Sorry, this one is a bit of a longer post. Perhaps goes best with a cup of coffee or a bottle of Club Mate ;) )

Nextcloud can be run in combination with different software stacks, starting with the PHP runtime, to web servers, databases, memcache servers (or without), file storages and so on.

And so there are different ways to set up a Nextcloud development environment. The developer documentation provides a basis for this. A lot of devs are using Julius’s docker setup so they only need to mount their code.

I am going for a mostly local setup running on good old evergreen Arch Linux. This setup allows for:

- multi-tenancy – having Nextcloud instances in parallel

- multi-runtime – having various PHP versions available at the same time

- TLS enabled – easy and pragmatic certificates

- containerless – but you can add dockered components if you like or need to

Mind, this is a development setup and in no way supposed to be used productively or exposed to the Internet. All of the installed services are configured to listen to localhost connections only!

Branch based code checkouts

The development process in the Nextcloud server git repository is built around the default branch and the stable branches for each Nextcloud series. The branches for the current series are stable29 and stable28 while the work on the upcoming version happens on master. The branch out to the next stable30 is going to happen with the first release candidate.

Typically a change is merged into the master branch first and then backported – adjusted if required – to the targeted stable branches. Sometimes fixes are only relevant to older branches, when the code has diverged. Then they go against the affected stable branch directly. Having the stable branches checked out is also useful when debugging a specific version.

Knowing this it makes sense to obviously have the master branch cloned, but also relevant stable branches. I am running an instance for each of them.

Instead of cloning each branch, I take advantage of git’s worktree feature. The .git directory in the default branch remains central, all others become files pointing to the default one.

git worktree list
…
/srv/http/nextcloud/stable27  08cd95790a4 [stable27]
/srv/http/nextcloud/stable28  fd066f90a59 [stable28]
/srv/http/nextcloud/stable29  ed744047bde [stable29]
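For readers who have not used worktrees before, here is a minimal sketch of how such a layout comes about. It works on a throwaway repository in a temporary directory, so it is safe to try. The `BASE` variable, the branch list and the empty initial commit are assumptions for the demo and not part of my actual setup. In a real server clone the equivalent step would be something like `git worktree add ../stable29 stable29`, run from the master checkout.

```shell
#!/usr/bin/env bash
# Demo in a throwaway directory; BASE stands in for /srv/http/nextcloud.
BASE="$(mktemp -d)"

# The central clone: the only place with a real .git directory.
git init -q "$BASE/master"
git -C "$BASE/master" symbolic-ref HEAD refs/heads/master
git -C "$BASE/master" -c user.name=dev -c user.email=dev@localhost \
    commit -q --allow-empty -m "initial commit"

# One worktree per stable branch, as siblings of the master checkout.
for branch in stable28 stable29; do
    git -C "$BASE/master" branch "$branch"
    git -C "$BASE/master" worktree add "$BASE/$branch" "$branch" >/dev/null 2>&1
done

git -C "$BASE/master" worktree list
# In each worktree, .git is a plain file pointing back to the central clone.
cat "$BASE/stable29/.git"
```

All worktrees share one object store, so a fetch in any of them makes the new commits available to all branches.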

Apps

Apart from the server, there is also a range of apps that I am working on. Some of them are bundled with the server repository, but most have their own repository. I keep them separated in different app directories to keep some order:

- apps: only the apps from the server repository

- apps-web: for apps installed from the app store

- apps-repos: for direct checkouts

- apps-isolated: also cloned apps, but bind-mounted from elsewhere

The apps-isolated folder is an edge case addressing trouble I was having with one app and its npm build. npm looks for dependencies in the parent folders, and that was conflicting with the path. Keeping the app outside of the Nextcloud server tree solves the problem. Actually, I could do this in general with apps-repos instead and have just one folder.

This requires a configuration in each instance’s config.php:

'apps_paths' => array (
  0 => array (
    'path' => '/srv/http/nextcloud/master/apps',
    'url' => '/apps',
    'writable' => false,
  ),
  1 => array (
    'path' => '/srv/http/nextcloud/master/apps-repos',
    'url' => '/apps-repos',
    'writable' => false,
  ),
  2 => array (
    'path' => '/srv/http/nextcloud/master/apps-web',
    'url' => '/apps-web',
    'writable' => true,
  ),
  3 => array (
    'path' => '/srv/http/nextcloud/master/apps-isolated',
    'url' => '/apps-isolated',
    'writable' => false,
  ),
),

Only the apps-web folder is marked as writable, so apps installed from the app store will only end up there.

I am using git worktrees with the apps as well. Those that I need on older Nextcloud versions also get a worktree located in the respective folder, for example:

$ git worktree list
/srv/http/nextcloud/master/apps-repos/flow_notifications    9c34617 [master]
…
/srv/http/nextcloud/stable27/apps-repos/flow_notifications  be83bda [stable27]
/srv/http/nextcloud/stable28/apps-repos/flow_notifications  eefe903 [stable28]
/srv/http/nextcloud/stable29/apps-repos/flow_notifications  9979422 [stable29]

This example shows an app that also maintains a stable branch for each Nextcloud major version. But there are also others with branches compatible with a range of Nextcloud server versions. There, I add a worktree for the highest supported version and fill the gaps again with bind mounts:

sudo mount --bind /srv/http/nextcloud/master/apps-repos/user_saml /srv/http/nextcloud/stable29/apps-repos/user_saml

Bind mounts are easier to work with in PhpStorm as they appear and are treated as normal folders. Symlinks, however, would be opened at their original location, and PhpStorm would consider them as not belonging to the open project.

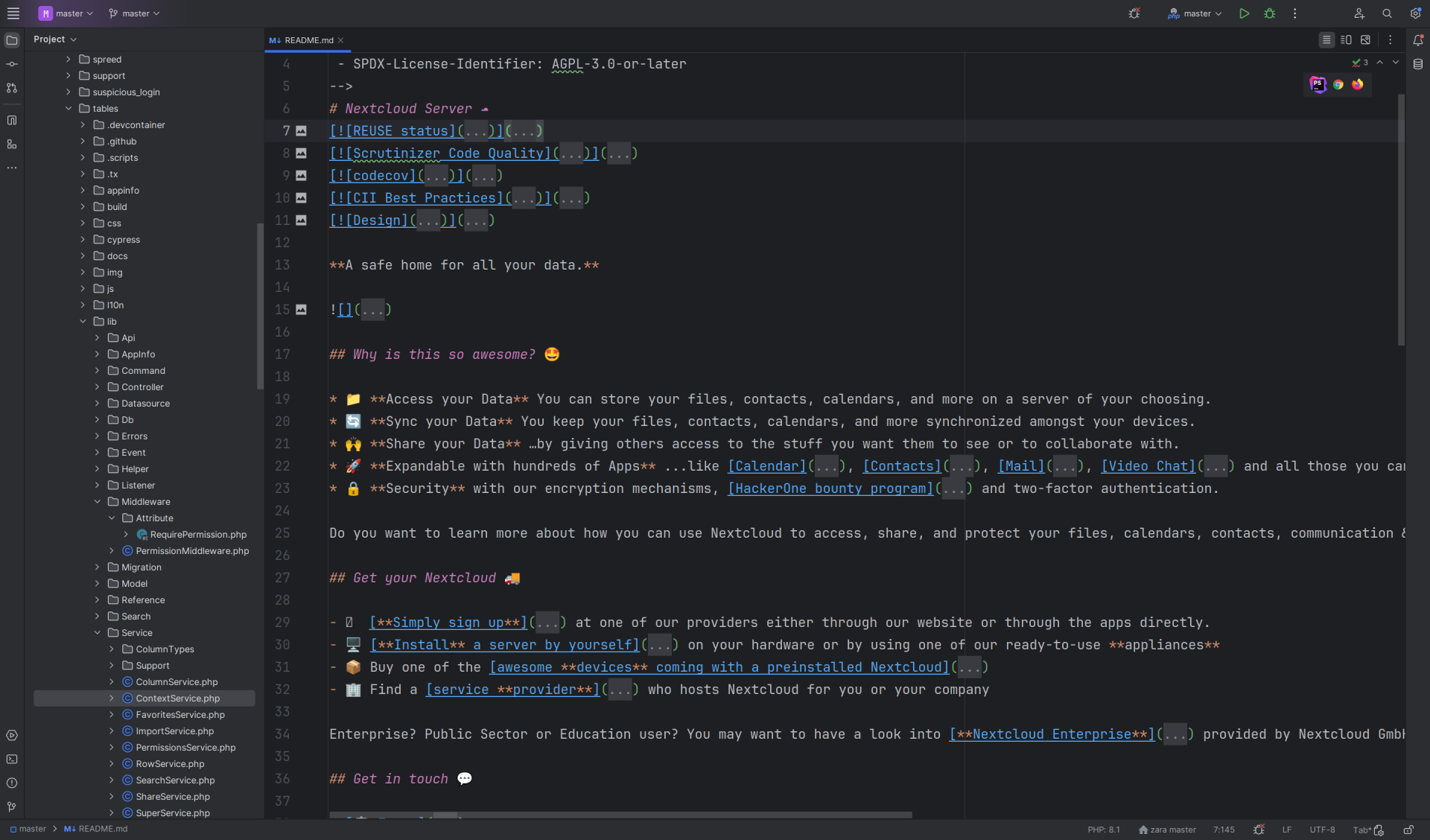

PhpStorm is the IDE of choice and there I also have a project for each stableXY and the master checkout.

PHP runtime

Using Arch I can always run the latest and greatest PHP version. That mostly works against master, but Nextcloud 27, for example, is already incompatible with PHP 8.3.

Fortunately there is el_aur providing older PHP versions via AUR. The packages fetch the sources of PHP and its modules and compile them on the machine, so updates may take a bit, but the versions can be run in parallel as they do not conflict with the current PHP version.

Later on for accessing Nextcloud via browser it is necessary to have the php*-fpm packages installed.

Effectively, it is “just” building the old PHP versions on Arch.

Web server and TLS

Now a web server would be fancy, to reach all the Nextcloud versions in the browser with all the supported PHP versions.

I settle for Apache2 and have a virtual host defined for each PHP version. For historical reasons I set up domains in the form of nc[PHPVERSION].foobar (where foobar was my computer’s name back then). So I end up with nc.foobar, nc82.foobar, nc81.foobar and nc74.foobar.

Each virtual host is configured like this:

<VirtualHost *:443>
    ServerAdmin webmaster@localhost
    ServerName nc82.foobar
    DocumentRoot /srv/http/nextcloud/

    Include conf/extra/php82-fpm.conf

    RequestHeader edit "If-None-Match" "^\"(.*)-gzip\"$" "\"$1\""
    RequestHeader edit "If-Match" "^\"(.*)-gzip\"$" "\"$1\""
    Header edit "ETag" "^\"(.*[^g][^z][^i][^p])\"$" "\"$1-gzip\""

    <Directory /srv/http/nextcloud/>
        Options Indexes FollowSymLinks MultiViews
        AllowOverride All
        Order allow,deny
        allow from all
        Require all granted
    </Directory>

    LogLevel warn
    ErrorLog /var/log/httpd/error.log
    CustomLog "|/usr/bin/rotatelogs -n3 -L /var/log/httpd/access.log /var/log/httpd/access.log.part 86400" time_combined

    KeepAlive On
    KeepAliveTimeout 100ms
    MaxKeepAliveRequests 2

    SSLEngine on
    SSLCertificateFile /path/to/.local/share/mkcert/nc82.foobar.pem
    SSLCertificateKeyFile /path/to/.local/share/mkcert/nc82.foobar-key.pem
</VirtualHost>

The variable parts are the ServerName, the included php-fpm configuration, and the path to the TLS certificate.
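Since only these three parts vary, a new virtual host could be stamped out from a small template. The following is just a sketch: the function name `vhost_stub` and the `CERT_DIR` variable are made up for illustration, the certificate path is the same placeholder as above, and the include name for the default PHP version (`php-fpm.conf`) is an assumption.

```shell
#!/usr/bin/env bash
# vhost_stub SUFFIX: print the per-version parts of a virtual host.
# SUFFIX is the PHP version suffix ("82", "81", ...) or empty for the default PHP.
# CERT_DIR is a placeholder, as in the configuration above.
CERT_DIR="${CERT_DIR:-/path/to/.local/share/mkcert}"

vhost_stub() {
    local suffix="$1"
    local name="nc${suffix}.foobar"
    cat <<EOF
<VirtualHost *:443>
    ServerName ${name}
    DocumentRoot /srv/http/nextcloud/
    Include conf/extra/php${suffix}-fpm.conf
    # ... shared directives (headers, <Directory>, logging, keep-alive) go here ...
    SSLEngine on
    SSLCertificateFile ${CERT_DIR}/${name}.pem
    SSLCertificateKeyFile ${CERT_DIR}/${name}-key.pem
</VirtualHost>
EOF
}

vhost_stub 82
```

Calling `vhost_stub 81` prints the same frame for nc81.foobar, and an empty suffix gives the nc.foobar host.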

The document root points to the general parent folder, so I access all instances through a sub directory, e.g. https://nc82.foobar/stable29 or https://nc.foobar/master

To deal with name resolution, I simply add the domain names to /etc/hosts.

cat /etc/hosts
# Static table lookup for hostnames.
# See hosts(5) for details.
127.0.0.1 localhost
::1 localhost
127.0.1.1 foobar foobar.localdomain nc.foobar nc74.foobar nc81.foobar nc82.foobar cloud.example.com

And I have cloud.example.com! That is a plain instance just installed from the release archive for documentation purposes (taking screenshots with a vanilla theme) and such.

For TLS certificates I take advantage of mkcert. mkcert is a tool by Filippo Valsorda that makes it damn easy to create locally trusted certificates. It creates a local Certificate Authority and imports it system-wide. You can then create certificates signed by that CA for any of your domains, wire them into the web server, reload it, done. Your browser will be happy.
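As a rough sketch, issuing a certificate for one of the hosts could look like this. The helper name `issue_cert` is hypothetical, and storing the files next to the CA in mkcert's CAROOT directory is an assumption derived from the paths in the virtual host above; mkcert would otherwise write them to the current directory.

```shell
#!/usr/bin/env bash
# issue_cert HOSTNAME: create a locally trusted certificate with mkcert.
issue_cert() {
    local host="$1"
    local dir
    # mkcert -CAROOT prints the CA directory (default on Linux: ~/.local/share/mkcert)
    dir="$(mkcert -CAROOT)" || return 1
    mkcert -cert-file "${dir}/${host}.pem" \
           -key-file "${dir}/${host}-key.pem" \
           "${host}"
}

# One-time step: create the local CA and add it to the trust stores.
#   mkcert -install
# Then, per virtual host:
#   issue_cert nc82.foobar && sudo systemctl reload httpd
```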

Finally, I keep the web server configured to listen only on local addresses, so that it is not available externally (firewall or not).

Database

MySQL is widespread, but Nextcloud also supports PostgreSQL. PostgreSQL seems to have some nicer defaults and is less hassle to administer. Besides, many devs already work with MySQL, so a different database adds some variety. So, I settle for PostgreSQL.

By connecting through a unix socket, I do not even need stupid default passwords. I just create a database and user up front, before installing the Nextcloud instance, and done.

sudo -iu postgres createuser stable29
sudo -iu postgres createdb --owner=stable29 stable29

The database shell is just a sudo -iu postgres psql away, and there is a quick cheat sheet, “PostgreSQL for MySQL users”, that provides the basic information and is all that is needed for the first jump into the cold water. The rest comes naturally on the way.

Memcache

I run Redis locally (and should transition to redict) and also access it via a unix socket. To do so, the web user, here http, has to be part of the redis group so that the web server and thus Nextcloud can open a connection.

The entries in the config.php are then simply:

'memcache.distributed' => '\\OC\\Memcache\\Redis',
'memcache.locking' => '\\OC\\Memcache\\Redis',
'redis' =>
array (
  'host' => '/var/run/redis/redis.sock',
  'port' => 0,
  'timeout' => 0.0,
),

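In order to have Redis listen on a unix socket only, the port has to be set to 0 in /etc/redis/redis.conf, which disables the TCP listener; the socket itself is configured with the unixsocket and unixsocketperm options. The socket path and permissions should be double-checked to battle common first connection problems.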
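To make that double-checking less tedious, a small helper could inspect the configuration file. This is only a sketch: the function name `check_redis_socket_conf` is made up, and it merely looks for the three directives without validating the socket path itself.

```shell
#!/usr/bin/env bash
# check_redis_socket_conf FILE: report whether FILE sets up a socket-only Redis.
# Prints one line per problem and returns non-zero if anything is missing.
check_redis_socket_conf() {
    local conf="$1" ok=0
    grep -Eq '^[[:space:]]*port[[:space:]]+0[[:space:]]*$' "$conf" \
        || { echo "port is not 0 (TCP listener still enabled)"; ok=1; }
    grep -Eq '^[[:space:]]*unixsocket[[:space:]]+/' "$conf" \
        || { echo "unixsocket path is not set"; ok=1; }
    grep -Eq '^[[:space:]]*unixsocketperm[[:space:]]+[0-7]{3}' "$conf" \
        || { echo "unixsocketperm is not set"; ok=1; }
    return "$ok"
}

# Typical follow-up steps (not run here):
#   check_redis_socket_conf /etc/redis/redis.conf
#   sudo usermod -aG redis http
#   sudo -u http redis-cli -s /var/run/redis/redis.sock ping
```

The last command is a quick way to see whether the web user can actually reach the socket; a working connection answers with PONG.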

There should not be a material difference with redict, apart from the name, which shows up in particular in the path and the system group.

Some time ago, I also had to debug a case where memcached was used. In this volatile use case I ran memcached in a docker container instead and configured that. This can be thrown away, and quickly re-introduced again if ever necessary, but that’s not the daily bread.

Permissions

Remember that Nextcloud was cloned into a directory that actually belongs to http and not to the actual user?

This setup requires that both http and $USER can read all files and write to some of them. http must be able to write into data, config and apps-web, $USER should be able to write anywhere.

Instead of adding $USER to the http group (does not feel right), I make use of Linux ACLs.

/srv/http/nextcloud is owned by $USER, but is generally readable.

For /srv/http/nextcloud/{master,stableXY} it is the same, but default ACLs are also set so that $USER always has full access, e.g. when Nextcloud creates files in the data directory. The primary goal is to read or reset the nextcloud.log without hassles.
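In terms of commands, such ACLs could be set roughly as follows. This is a sketch and not a copy of what I ran: the helper name `grant_dev_access` is hypothetical, and the exact permission set (`rwX`) is an assumption.

```shell
#!/usr/bin/env bash
# grant_dev_access DIR [USER]: give USER full access to DIR via ACLs,
# now and (through default ACLs) for files created later by the web server.
grant_dev_access() {
    local dir="$1" user="${2:-$USER}"
    # Existing files: rwX = read/write, execute only on directories and executables.
    setfacl -R -m "u:${user}:rwX" "$dir" || return 1
    # Default ACL: inherited by everything created below DIR from now on.
    setfacl -R -d -m "u:${user}:rwX" "$dir"
}

# Example (not run here):
#   sudo bash -c "$(declare -f grant_dev_access); grant_dev_access /srv/http/nextcloud/master $USER"
#   getfacl /srv/http/nextcloud/master/data
```

`getfacl` shows the resulting entries, which helps when a freshly created log file turns out not to be readable after all.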

The annoying thing is that Nextcloud is very sensitive about config/config.php and overrides permissions, so using sudo to modify the config is necessary, but survivable.

occ

Using occ, I was bored of typing sudo -u http, so I had a shortcut for this. But then I was also getting tired of writing suww php occ and figuring out the right PHP version depending on which branch I was on. So I created a script that finds it out for me, and I can finally just run occ – which is what the script is called – and am good wherever I am:

#!/usr/bin/env bash

# Require: run from a Nextcloud root dir
if [ ! -f lib/versioncheck.php ]; then
    echo "Enter the Nextcloud root dir first" >&2
    exit 255
fi

# Test general php bin first
if php lib/versioncheck.php 1>/dev/null; then
    # Quote "$@" so that arguments containing spaces are passed on intact
    sudo -u http php occ "$@"
    exit $?
fi

# Walk through installed binaries, from highest version downwards
# ⚠ requires bfs, which is a drop-in replacement for find, see https://tavianator.com/projects/bfs.html
BINARIES=$(bfs /usr/bin/ -type f -regextype posix-extended -regex '.*/php[0-9]{2}$' | sort -r)

for PHPBIN in ${BINARIES}; do
    if "${PHPBIN}" lib/versioncheck.php 1>/dev/null; then
        sudo -u http "${PHPBIN}" occ "$@"
        exit $?
    fi
done

echo "No suitable PHP binary found" >&2
exit 254

It simply tries out the available php binaries against the lib/versioncheck.php and takes the first one that exits with a success code.

Other components

Just briefly:

I run OpenLDAP locally, and this is typically configured in my instances. It has a lot of mass-created users, and allows me to create a lot of odd configurations quickly, because creative things are out in the wild. Having them in different subtrees of the directory helps to keep them apart.

Further, I have a VM (using libvirtd/qemu) with a Samba4 server that not only offers SMB shares (don’t want that locally), but also acts as an Active Directory compatible LDAP server. I don’t need it often though.

Then, there is a Keycloak docker container in use to have a SAML provider on the machine (but I also have accounts at some SaaS providers, though I cannot really imagine why you would ever want to outsource authentication and authorization).

If necessary I spin up a Collabora CODE server, which then also runs locally, but only on demand.

Ready for takeoff

When I am ready to (test my) code, I execute devup and it does something like this:

sudo mount --bind /srv/http/apps/dev /srv/http/nextcloud/master/apps-isolated
sudo mount --bind /srv/http/apps/26 /srv/http/nextcloud/stable26/apps-isolated
sudo mount --bind /srv/http/nextcloud/stable27/apps-repos/user_saml /srv/http/nextcloud/stable25/apps-repos/user_saml
sudo mount --bind /srv/http/nextcloud/master/apps-repos/user_saml /srv/http/nextcloud/stable29/apps-repos/user_saml
sudo systemctl start redis
sudo systemctl start docker || true
sudo systemctl start slapd
sudo systemctl start postgresql
sudo systemctl start php-fpm
sudo systemctl start php82-fpm.service
sudo systemctl start php74-fpm.service
sudo systemctl start httpd
sudo systemctl start libvirtd

Essentially I set up the bind-mounts first, and later on start related services. There is a devdown script as well, that stops the services and unmounts the mounts.
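The devdown counterpart is not shown here, but it could look roughly like the following sketch. The `run` wrapper, the `DRY_RUN` switch and the approach of passing the mount targets as arguments are illustrative assumptions; the service names are taken from the devup listing above.

```shell
#!/usr/bin/env bash
# Hypothetical devdown: stop services, then release the bind mounts.
# Set DRY_RUN=1 to print the commands instead of running them.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

devdown() {
    local svc target
    # Stop in roughly the reverse order of devup.
    for svc in libvirtd httpd php74-fpm php82-fpm php-fpm postgresql slapd docker redis; do
        run sudo systemctl stop "$svc"
    done
    # Unmount only what is actually mounted (the check is skipped in a dry run).
    for target in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ] || mountpoint -q "$target"; then
            run sudo umount "$target"
        fi
    done
}

# Example (dry run):
#   DRY_RUN=1 devdown /srv/http/nextcloud/master/apps-isolated
```

Stopping the services first avoids unmounting a directory that PHP or the web server still has open.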

Advantages and pitfalls

This setup allows development across various branches and their different requirements without fiddling with other services or components, apart from some initial setup (e.g. new server worktree) or occasional routine tasks (e.g. new PHP version). Instances are set up once, but are otherwise persistent.

By being persistent it allows some undirected, but closer to reality testing: different configurations, combinations of apps, and in situ data let you uncover effects that otherwise may not happen if you have a very clean, very minimal installation. In-series upgrades may make you aware of problems that arise and are not detected by automated tests.

Having varying developer setups across the developer base is inherent testing of various possible ways of composing the stack. For instance, both a colleague and I have stumbled across different database behaviour in MySQL or Postgres respectively and could address them before a release.

Lastly, setting up the different components of the stack makes one more involved in them. When it is not ready-to-run off the shelf, one is going to understand better how this stuff works – and it is helpful to have a good idea of the foundations of the stack.

Every coin has two sides, of course. This setup is sometimes also a source of PEBCAK moments. The federated sharing of address books did not work, not because of some odd race condition in setting up the trusted relationship between the servers, but because I had disabled receiving shares while testing some case a while ago.

Or, structural changes are made but later reverted (for example in the database schema). While nothing would happen in a regular update, the intermediate change might have taken effect and has to be reverted manually. And you need to be aware of it. It happens maybe once every three years, and only if you are unlucky enough to run into this situation, but it might happen.

Occasionally, though I do not have that need too often, I set up an ad-hoc instance on a memdisk (/dev/shm/). The use case is either to closely replicate a configuration for debugging reasons, or to investigate an issue during upgrading. Especially then it is easy to roll back (initialize a git repo and use sqlite if possible – with other DBs they have to be dumped).
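The rollback trick can be sketched with two small helpers. The names `snapshot` and `rollback` are made up for illustration, and the sketch assumes an instance extracted from a release archive (so no existing git repository) that uses sqlite, where the database file by default lives inside the data directory and is therefore part of the snapshot.

```shell
#!/usr/bin/env bash
# snapshot DIR: record the current state of an ad-hoc instance.
snapshot() {
    local dir="$1"
    [ -d "$dir/.git" ] || git -C "$dir" init -q
    git -C "$dir" add -A
    git -C "$dir" -c user.name=dev -c user.email=dev@localhost \
        commit -q --allow-empty -m "snapshot $(date +%FT%T)"
}

# rollback DIR: discard everything that changed since the last snapshot.
rollback() {
    local dir="$1"
    git -C "$dir" reset -q --hard HEAD
    git -C "$dir" clean -q -fd
}

# Example (not run here), with a hypothetical instance path:
#   snapshot /dev/shm/nc-upgrade-test
#   ... run the upgrade, poke around ...
#   rollback /dev/shm/nc-upgrade-test
```

One caveat: if the tree contains a .gitignore that excludes the data or config directory, those files would silently be left out of the snapshot, so it is worth checking `git status --ignored` once.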

This is the setup that has crystallized and improved over time since I switched to Arch Linux (via Antergos back then – was it 2016?). I am comfortable with it, and I would call it battle-tested. Perhaps it is not the best choice for someone only interested in developing a couple of apps, but it has benefits with a server focus or a bunch of server experience.
